Designed and Built a Deep Learning Library Called Needle

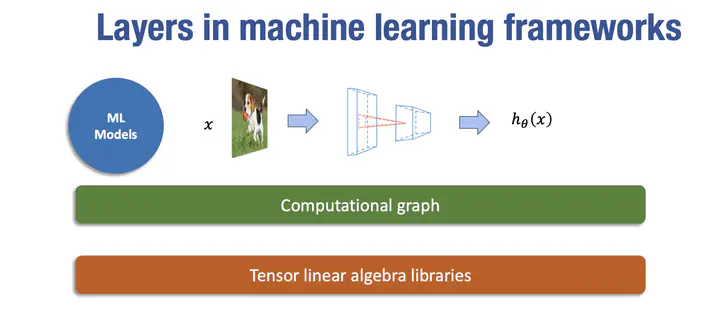

Completed CMU's online course on deep learning systems to dig into the internals of PyTorch and TensorFlow and understand how they work at a fundamental level.

Designed and built a deep learning library called Needle that provides efficient GPU-based operations, automatic differentiation of all implemented functions, and the modules needed to support parameterized layers, loss functions, data loaders, and optimizers.

Project 0

Build a basic softmax regression algorithm and a simple two-layer neural network. Implement both in native Python (using the NumPy library) and, for softmax regression, in native C/C++.

✅ A basic add function

✅ Loading MNIST data: parse_mnist function

✅Softmax loss: softmax_loss function

✅Stochastic gradient descent for softmax regression

✅SGD for a two-layer neural network

✅Softmax regression in C++
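As a rough illustration of the softmax loss and SGD items above, here is a minimal NumPy sketch. The function names `softmax_loss` and `softmax_regression_step`, the toy data, and the learning rate are assumptions made for this example; they are not taken from the project's actual code.

```python
import numpy as np

def softmax_loss(Z, y):
    """Average cross-entropy loss for logits Z (batch, classes) and integer labels y (batch,)."""
    # Subtracting the row-wise max improves numerical stability and leaves the loss unchanged.
    Z = Z - Z.max(axis=1, keepdims=True)
    log_sum_exp = np.log(np.exp(Z).sum(axis=1))
    return float(np.mean(log_sum_exp - Z[np.arange(Z.shape[0]), y]))

def softmax_regression_step(X, y, theta, lr=0.1):
    """One SGD step on a minibatch for the linear model Z = X @ theta (updates theta in place)."""
    Z = X @ theta
    Z = Z - Z.max(axis=1, keepdims=True)
    probs = np.exp(Z)
    probs /= probs.sum(axis=1, keepdims=True)
    one_hot = np.zeros_like(probs)
    one_hot[np.arange(X.shape[0]), y] = 1.0
    # Gradient of the average loss with respect to theta.
    grad = X.T @ (probs - one_hot) / X.shape[0]
    theta -= lr * grad

# Toy data (random, for illustration only; not MNIST).
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 5))
y = rng.integers(0, 3, size=32)
theta = np.zeros((5, 3))

loss_before = softmax_loss(X @ theta, y)
for _ in range(50):
    softmax_regression_step(X, y, theta)
loss_after = softmax_loss(X @ theta, y)
```

With `theta` initialized to zeros, every class gets equal probability, so the starting loss equals the log of the number of classes; repeated steps on the same batch should then lower it.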

Project 1

Build a basic automatic differentiation framework, then use it to re-implement the simple two-layer neural network used for the MNIST digit classification problem in Project 0.

✅Implementing forward computation

- ✅PowerScalar

- ✅EWiseDiv

- ✅DivScalar

- ✅MatMul

- ✅Summation

- ✅BroadcastTo

- ✅Reshape

- ✅Negate

- ✅Transpose

✅Implementing backward computation

- ✅EWiseDiv

- ✅DivScalar

- ✅MatMul

- ✅Summation

- ✅BroadcastTo

- ✅Reshape

- ✅Negate

- ✅Transpose

✅Topological sort: allows us to traverse the computation graph (forward or backward), computing gradients along the way

✅Implementing reverse mode differentiation

✅Softmax loss

✅SGD for a two-layer neural network
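The topological sort and reverse-mode differentiation items above can be sketched on scalars as follows. The `Node` class, `topo_sort`, and `backward` are hypothetical names for this illustration and do not reflect Needle's actual tensor API; only addition and multiplication are covered.

```python
class Node:
    """A scalar value in a computation graph (hypothetical; not Needle's Tensor class)."""

    def __init__(self, value, inputs=(), grad_fns=()):
        self.value = value
        self.inputs = inputs      # nodes this value was computed from
        self.grad_fns = grad_fns  # one function per input: output gradient -> input gradient
        self.grad = 0.0

    def __add__(self, other):
        return Node(self.value + other.value, (self, other),
                    (lambda g: g, lambda g: g))

    def __mul__(self, other):
        return Node(self.value * other.value, (self, other),
                    (lambda g: g * other.value, lambda g: g * self.value))

def topo_sort(output):
    """Post-order depth-first search: every node appears after all of its inputs."""
    order, visited = [], set()

    def visit(node):
        if id(node) in visited:
            return
        visited.add(id(node))
        for inp in node.inputs:
            visit(inp)
        order.append(node)

    visit(output)
    return order

def backward(output):
    """Reverse-mode differentiation: walk the topological order backwards, accumulating gradients."""
    output.grad = 1.0
    for node in reversed(topo_sort(output)):
        for inp, grad_fn in zip(node.inputs, node.grad_fns):
            inp.grad += grad_fn(node.grad)

x, y = Node(2.0), Node(3.0)
z = x * y + x   # dz/dx = y + 1, dz/dy = x
backward(z)
```

Processing nodes in reverse topological order means a node's gradient has been fully accumulated from all of its consumers before it is passed on to its inputs, which matters here because `x` is used twice.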

Project 2

Implement a neural network library in the Needle framework.

✅Implement a few different methods for weight initialization

✅Implement additional modules

- ✅Linear: needle.nn.Linear class

- ✅ReLU: needle.nn.ReLU class

- ✅Sequential: needle.nn.Sequential class

- ✅LogSumExp: needle.ops.LogSumExp class

- ✅SoftmaxLoss: needle.nn.SoftmaxLoss class

- ✅LayerNorm1d: needle.nn.LayerNorm1d class

- ✅Flatten: needle.nn.Flatten class

- ✅BatchNorm1d: needle.nn.BatchNorm1d class

- ✅Dropout: needle.nn.Dropout class

- ✅Residual: needle.nn.Residual class

✅Implement the step function of the following optimizers.

- ✅SGD: needle.optim.SGD class

- ✅Adam: needle.optim.Adam class
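For reference, a single Adam update can be sketched in NumPy as below. This is the standard bias-corrected rule applied to one parameter array, without weight decay; the `adam_step` function, the `state` dictionary layout, and the hyperparameter defaults are assumptions for this example, not the signature of needle.optim.Adam.

```python
import numpy as np

def adam_step(param, grad, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single parameter array (hypothetical helper).

    `state` holds the step count "t" and the running moment estimates "m" and "v".
    """
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2
    # Bias correction compensates for the moments being initialized at zero.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (np.sqrt(v_hat) + eps)

# Toy problem: minimize f(w) = ||w - target||^2, whose gradient is 2 * (w - target).
target = np.array([1.0, -2.0])
w = np.zeros(2)
state = {"t": 0, "m": np.zeros(2), "v": np.zeros(2)}
for _ in range(2000):
    grad = 2 * (w - target)
    w = adam_step(w, grad, state)
```

On the very first step the bias-corrected moments reduce to the gradient and its square, so each coordinate moves by roughly `lr` in the direction opposite its gradient's sign, regardless of the gradient's magnitude.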

✅Implement two data primitives: needle.data.DataLoader and needle.data.Dataset

- ✅Transformations: RandomFlipHorizontal function and RandomFlipHorizontal class

- ✅Dataset: needle.data.MNISTDataset class

- ✅Dataloader: needle.data.DataLoader class

✅Build and train an MLP ResNet

- ✅ResidualBlock: ResidualBlock function

- ✅MLPResNet: MLPResNet function

- ✅Epoch: epoch function

- ✅Train Mnist: train_mnist function
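To show how modules such as Linear, ReLU, Sequential, and Residual compose into a residual block, here is a forward-only NumPy sketch. The classes below merely borrow the names used in the list above; their constructors, the weight scaling, and the layer sizes are assumptions, and the sketch leaves out normalization, dropout, and gradients.

```python
import numpy as np

class Linear:
    """Affine layer y = x @ W + b (forward pass only)."""
    def __init__(self, in_features, out_features, rng):
        # Scaling by sqrt(2 / fan_in) is an assumption for this sketch.
        self.weight = rng.normal(size=(in_features, out_features)) * np.sqrt(2.0 / in_features)
        self.bias = np.zeros(out_features)

    def __call__(self, x):
        return x @ self.weight + self.bias

class ReLU:
    def __call__(self, x):
        return np.maximum(x, 0.0)

class Sequential:
    """Apply the given modules one after another."""
    def __init__(self, *modules):
        self.modules = modules

    def __call__(self, x):
        for module in self.modules:
            x = module(x)
        return x

class Residual:
    """Add the input back to the output of the wrapped function: fn(x) + x."""
    def __init__(self, fn):
        self.fn = fn

    def __call__(self, x):
        return self.fn(x) + x

rng = np.random.default_rng(0)
block = Sequential(
    Residual(Sequential(Linear(8, 16, rng), ReLU(), Linear(16, 8, rng))),
    ReLU(),
)
x = rng.normal(size=(4, 8))
out = block(x)
```

Because the skip connection adds `x` to the inner output, the wrapped function has to map back to the input dimension, which is why the second Linear layer returns to 8 features.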

Project 3

Build a simple backing library for the processing that underlies most deep learning systems: the n-dimensional array (a.k.a. the NDArray).

✅Python array operations

- ✅reshape: reshape function

- ✅permute: permute function
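Both operations can be expressed purely as changes to an array's shape and strides, without copying data. The sketch below uses NumPy's `as_strided` to illustrate the idea; the helper names `compact_strides`, `permute`, and `reshape` are assumptions for this example, and NumPy strides are measured in bytes, which may differ from how the project's NDArray counts them.

```python
import numpy as np
from numpy.lib.stride_tricks import as_strided

def compact_strides(shape, itemsize):
    """Row-major strides in bytes for a compact array of the given shape."""
    strides, step = [], itemsize
    for dim in reversed(shape):
        strides.append(step)
        step *= dim
    return tuple(reversed(strides))

def permute(a, axes):
    """Reorder axes by permuting shape and strides; the underlying memory is shared."""
    new_shape = tuple(a.shape[i] for i in axes)
    new_strides = tuple(a.strides[i] for i in axes)
    return as_strided(a, shape=new_shape, strides=new_strides)

def reshape(a, new_shape):
    """Reinterpret a compact (C-contiguous) array under a new shape; the memory is shared."""
    assert a.flags["C_CONTIGUOUS"], "this sketch only handles compact arrays"
    assert int(np.prod(new_shape)) == a.size, "element count must not change"
    return as_strided(a, shape=new_shape, strides=compact_strides(new_shape, a.itemsize))

a = np.arange(24, dtype=np.float32).reshape(2, 3, 4)
p = permute(a, (2, 0, 1))   # shape (4, 2, 3)
r = reshape(a, (6, 4))      # shape (6, 4)
```

A permuted view is generally no longer compact, so in this sketch it would have to be copied into compact memory before `reshape` could be applied to it.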